Biometrics And Neuro-Measurements For User Testing

(This article is sponsored by Adobe.) So it’s time to test the latest version of your app with users. You schedule your first user testing session. The participant enters the room; your lab partner puts velcro on the participant’s finger and fits a headband and head cap on before she sits down at a computer to start the user test session. What’s all this for? It’s biometrics and neuro-measurements.

In a “traditional” user test, you put a participant in front of your app, product, or software and give them tasks to do, ask them to “think aloud”, and observe and record what they say and what they do. You may ask them some questions before and after the session, too. I’ve done thousands of these sessions, and chances are that if you are a user researcher, you have too.

There’s nothing really wrong with user testing this way except that it relies on the participant telling you (either during or after the session) why they did what they did, and how they feel about the product or app. You can see that they clicked on a particular button or touched a link on the mobile app, but if they explain why, you are only getting the conscious reason why.

What if you could get their unconscious reactions? What if you could take a look inside your users’ brains and see what it is they aren’t saying, i.e. the things they themselves may not realize about their reactions to your product?

We know that most mental processing — including decision-making and emotional reactions — occurs unconsciously. So if people tell you how they feel and why they did something, it is possible that they believe what they are saying is the truth, but it’s also possible that they don’t know how they feel or why they did or did not take an action.

People filter their feelings, decisions and reasons consciously, and by that time you aren’t necessarily getting real data. Add to that the fact that users aren’t always truthful during user tests. They may not want to offend you by telling you they think your product is hard to use or boring.

So that’s why user researchers are starting to use some other tools to get reactions and data directly from the body without the filtering of conscious thought. Hence, biometrics and neuro-measurements.

Some of these new tools are easy and inexpensive to use. Others may take more investment of your time and budget. Or you may want to bring in an outside firm that specializes in these tools. (Some suggestions for outside vendors are at the end of the article.)

Let’s take a look at what’s available.

- Galvanic Skin Response (GSR)

- Respiration

- Heart Rate

- Eye Tracking

- Facial Coding

- fEMG (Facial Electromyography)

- EEG (Electroencephalography)

Galvanic Skin Response (GSR)

GSR is also called “electrodermal activity” or EDA. A typical GSR measurement device is a relatively small, unobtrusive sensor that is connected to the skin of your finger or hand.

Sweat glands on the hands are very sensitive to changes in your emotional state. If you become emotionally aroused — either positively or negatively — then you will release more sweat in your hands. Sometimes, these are very small changes that you may not notice. This is what a GSR monitor is measuring.

The GSR monitor can’t tell if you are happy, sad, scared, and so on, but it can tell if you are becoming more or less emotional. And since the amount of sweat you release is not under conscious control, a GSR monitor can measure what you may not be consciously aware of.
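To make the "more or less emotional" idea concrete, here is a minimal sketch of how you might flag shifts in a GSR recording against a resting baseline. The function name, the 15% threshold, and the sample values are all assumptions made for illustration; they don't come from any particular device or vendor software.

```python
from statistics import mean

def arousal_changes(readings, baseline_samples=5, threshold=0.15):
    """Label each reading 'up', 'down', or 'steady' relative to a resting baseline.

    readings: skin-conductance values in microsiemens, sampled at a fixed rate.
    threshold: fractional change from baseline treated as a real shift (assumed value).
    """
    baseline = mean(readings[:baseline_samples])
    labels = []
    for value in readings[baseline_samples:]:
        change = (value - baseline) / baseline
        if change > threshold:
            labels.append("up")
        elif change < -threshold:
            labels.append("down")
        else:
            labels.append("steady")
    return labels

# Made-up readings: five resting samples, then the task begins.
sample = [2.0, 2.1, 1.9, 2.0, 2.0, 2.1, 2.6, 3.0, 2.2, 1.6]
print(arousal_changes(sample))
```

Note that the labels only say whether arousal went up or down. As with the monitor itself, nothing here tells you whether the participant was delighted or frustrated.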

GSR monitoring has been around for over a hundred years. The monitors are relatively inexpensive and easy to learn how to use. The price for a GSR monitor ranges from about $150 to $600, depending on the brand and model you get. If you want to buy your own, check out Carolina Supply. iMotions also has a great downloadable guide to GSR monitors that you can get for free.

Respiration

It’s also relatively easy to measure respiration. When people are emotionally aroused, they breathe faster. This can be detected in several ways, the easiest being to place a cloth band around the chest and/or stomach and measure the expansion of the chest or stomach as people breathe.

If/when they are using your product and they start breathing faster, you can deduce that something has (either positively or negatively) affected them emotionally.

Heart Rate

You can also use the band around the chest or even a simpler measurement on a finger to measure heart rate/pulse. When you are emotionally aroused, your heart beats faster and your pulse increases.
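If your monitor gives you the time of each detected beat, turning that into a heart rate is simple arithmetic: 60 divided by the average number of seconds between beats. Here is a small sketch; the function name and the sample timestamps are made up for illustration.

```python
def beats_per_minute(beat_times):
    """Average heart rate from the timestamps (in seconds) of detected beats."""
    if len(beat_times) < 2:
        raise ValueError("need at least two beats to measure an interval")
    # Time elapsed between each beat and the next one.
    intervals = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    return 60.0 / (sum(intervals) / len(intervals))

resting = [0.0, 1.0, 2.0, 3.0, 4.0]    # one beat per second
aroused = [0.0, 0.5, 1.0, 1.5, 2.0]    # two beats per second

print(beats_per_minute(resting))
print(beats_per_minute(aroused))
```

The same calculation works for respiration if you swap beat timestamps for the time of each breath.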

How would you use GSR, respiration, or heart rate data in a user test or study? Let’s say you are testing an app for getting an insurance quote. You ask the user what they think of the insurance quote app, and they answer:

“It was OK, it wasn’t too hard to use.”

But looking at their GSR, respiration, and/or heart rate might tell you that they were stressed. The data will also show you when and where in the process they had the most stress.
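Finding "when and where" comes down to lining up timestamped readings with the steps of the task. The sketch below assumes you have logged the start and end time of each step yourself; the step names, times, and readings are invented for the insurance quote example.

```python
from collections import defaultdict
from statistics import mean

def mean_by_step(readings, steps):
    """Average a signal within each task step.

    readings: list of (seconds, value) pairs from any one monitor.
    steps: list of (start, end, name) with times in seconds.
    """
    grouped = defaultdict(list)
    for t, value in readings:
        for start, end, name in steps:
            if start <= t < end:
                grouped[name].append(value)
                break
    return {name: mean(values) for name, values in grouped.items()}

steps = [(0, 10, "enter details"), (10, 20, "choose coverage"), (20, 30, "review quote")]
readings = [(2, 2.0), (8, 2.2), (12, 3.0), (18, 3.4), (22, 2.4), (28, 2.2)]

averages = mean_by_step(readings, steps)
highest_arousal_step = max(averages, key=averages.get)
print(highest_arousal_step)
```

In this made-up session, the "choose coverage" step stands out, which is where you would then look at the recording to see what the participant was doing.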

Like GSR monitors, heart-rate and respiration monitors are relatively inexpensive (under $100). What you may really want, however, is a total package that includes a universal monitor that you can plug more than one measurement into.

For example, you can use GSR, heart rate, respiration and even EEG (discussed below), plus software that lets you monitor the data and combine it with actions your users are taking at specific moments during your user study. These packages will cost you a lot, however. A whole system may run as much as $7,000.

To get started, you may want to bring in a vendor who has the equipment to get your feet wet before you decide to buy these tools for your lab.

Eye Tracking

I am probably unusual in my criticisms of eye tracking. A lot of people like eye tracking, but I think it has some problems. I’ll explain why.

Eye tracking involves having people look at a special monitor or wear eye-tracking headsets/glasses. The eye tracker measures what you look at and how long you look at it. If you were doing user testing on a web page, then you could see (either for an individual or through aggregated data) where people looked most, how long they looked at it, what people did not look at, and so on.
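As a rough illustration of what the analysis software does with that data, here is a sketch that adds up how long a participant looked at each region of a page. The region names, pixel coordinates, and fixation values are all hypothetical; real eye-tracking packages have their own export formats.

```python
def dwell_time_by_area(fixations, areas):
    """Total looking time per page region.

    fixations: list of (x, y, duration_ms) from the eye tracker.
    areas: region name -> (left, top, right, bottom) in screen pixels.
    """
    # Start every region at zero so regions nobody looked at still show up.
    totals = {name: 0 for name in areas}
    for x, y, duration_ms in fixations:
        for name, (left, top, right, bottom) in areas.items():
            if left <= x < right and top <= y < bottom:
                totals[name] += duration_ms
                break
    return totals

areas = {
    "headline": (0, 0, 800, 100),
    "signup button": (600, 400, 800, 460),
    "footer link": (0, 560, 800, 600),
}
fixations = [(120, 40, 300), (400, 60, 250), (650, 420, 180), (300, 300, 200)]

print(dwell_time_by_area(fixations, areas))
```

Summing these totals across participants gives you the aggregated view, including the regions with zero looking time.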

Eye tracking works just fine in measuring what it is measuring. But here’s my criticism: Eye tracking only measures where people are looking with their central vision. It doesn’t measure peripheral vision.

Recent research on peripheral vision shows that peripheral vision is more important than once thought for information processing. For example, images of danger and emotion are processed faster in peripheral vision than in central vision. We also know now that people use peripheral vision to decide if they are in the right place, or in the case of software and website design, if they are at the right page or screen. It’s possible for people to “see” something in peripheral vision, but not be consciously aware that they have. And what they see can influence the action they take.

Since eye tracking doesn’t track any peripheral vision data, I am not a big fan of it. Monitors with eye tracking built in, plus the software to analyze and report on the data can cost around $7,000 to $10,000.

Facial Coding

Cameras can capture someone’s face as they use a product or watch a video. Algorithms can then analyze the facial expressions and tell you whether the person is confused, happy, scared, and so on.

Facial coding is also an “add-on” feature to eye tracking. You should assume similar pricing ($7,000 to $10,000) for facial coding as for eye tracking.

fEMG

EMG stands for electromyography, the measurement of the electrical activity of muscles. Whenever a muscle contracts, it generates a small amount of electricity, which can be detected with some fairly simple electrodes. Muscle movement can be very small; you may not see the muscle move, but you can measure it.

This means that some of the most interesting EMG measurements come from the movement of muscles in the face or fEMG. Facial coding uses algorithms to take a good guess at what the person is feeling, but with fEMG you can actually measure the muscles in the face and thereby more accurately assess the emotion that the person is feeling. There is muscle activity in the face that a video won’t detect, but that the fEMG recordings will detect. This means that with fEMG you can pick up on emotions that are not being obviously displayed through just facial coding.

When would you use facial coding or fEMG?

Well, let’s say you have created some new videos for the careers/employment page of your company’s website. The videos have real people who work at the company talking about how they came to be an employee, and what it is they like about working at the company. You want to know if people like and resonate with the videos. Facial coding and, even better, fEMG, would help you measure what people are feeling, and even tell you which parts of the video are eliciting which emotions.

fEMG equipment and software are expensive and not easy to learn how to use. For this reason, you will probably want to start by bringing in a vendor rather than using this on your own.

EEG (Electroencephalography)

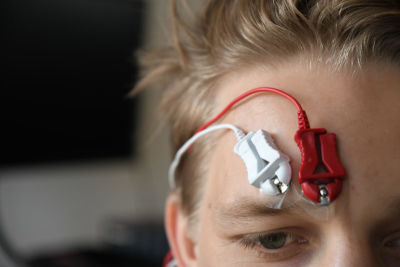

You can directly measure the electrical activity of the brain by placing electrodes on the scalp. EEG devices measure the electrical activity generated by neurons.

EEG measures electrical changes on the surface of the brain, not deep within particular brain structures. This means that EEG can’t tell you that a particular part of the brain is active. It can only tell you when there is more or less brain activity. You would need to use more sophisticated methods, such as fMRI (functional Magnetic Resonance Imaging), to study more specific brain activity. fMRI equipment is very large and very expensive, which is why only research and medical institutions use it. In contrast, EEG is inexpensive.

EEG measures whether a person is engaged and paying attention. EEG measurements are particularly good at showing you activity by seconds or even parts of a second. Let’s go back to the example of the user test to measure the impact of the employee story videos at the careers/jobs page of the corporate website. Are the videos interesting? Do people pay attention while watching them? Exactly which parts of the videos are engaging? EEG can tell you this.

When I was in graduate school and doing EEG research, we had to use electrodes and gel to get EEG readings, but now there are easier ways. You can place a cap on someone’s head, kind of like a swim cap, and the electrodes are built in to the cap.

Some devices are like headsets rather than swim caps.

EEG devices range from the inexpensive to the expensive. For example, Emotiv makes a $299 EEG headset. You will probably, however, want to get a higher end version for $799, and then you will need a subscription for the software ($99 a month).
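Because the subscription is billed monthly, it helps to add up the first year before you commit. This quick calculation uses only the prices quoted above, which may well have changed since this was written; the function name is mine.

```python
def first_year_cost(hardware, monthly_subscription, months=12):
    """One-time hardware price plus a year of software subscription."""
    return hardware + monthly_subscription * months

# Higher-end headset ($799) plus the software subscription ($99 a month).
print(first_year_cost(799, 99))  # 1987
```

So the higher-end setup comes to just under $2,000 for the first year, still well below the cost of a full multi-sensor package.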

It can take a while to learn how to accurately read EEG data, so, again, it might be better to start by bringing in a vendor who has all the equipment and know-how until you learn.

Combining Measurements

It is common to combine multiple methods of biometrics together to help with the accuracy and interpretation of the results.
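One simple way to combine signals that are measured in different units is to standardize each one and then average them moment by moment. The sketch below is only one possible approach, not how any particular vendor's software works, and it assumes the signals have already been time-aligned and sampled at the same rate. All names and values are made up.

```python
from statistics import mean, pstdev

def z_scores(values):
    """Rescale a signal to mean 0 and standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # a flat signal carries no change information
    return [(v - mu) / sigma for v in values]

def combined_arousal(signals):
    """signals: name -> list of equal-length, time-aligned samples."""
    standardized = [z_scores(values) for values in signals.values()]
    # Average across signals at each point in time.
    return [mean(column) for column in zip(*standardized)]

signals = {
    "gsr": [2.0, 2.0, 3.0, 3.0],            # microsiemens
    "heart_rate": [70, 70, 90, 90],         # beats per minute
    "respiration": [14, 14, 18, 18],        # breaths per minute
}

index = combined_arousal(signals)
print(index)
```

When all three signals rise together, as in the second half of this made-up session, the combined index rises too, which gives you more confidence than any single measurement would.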

Although biometrics and neuro-measurements don’t tell the whole story, the data that we get from biometrics and neuro-measurements is more accurate than self-reporting. As the tools become easier to use and researchers get used to using them, they will become more common. We may even get to the point where we stop using the think-aloud technique altogether, although I don’t think we are there yet!

Takeaways

- If you haven’t already researched biometrics for your user testing projects, now is a good time to check out these measurements as an addition to your current testing.

- Pick a modality and/or a vendor and do a trial project.

- If you are in charge of user-testing budgets, add in some biometrics to your budgeting process for the next year or two so you can get started.

Vendors

Vendors to consider for a biometric study:
