Web Accessibility In Context

Haben Girma, disability rights advocate and Harvard Law’s first deafblind graduate, made the following statement in her keynote address at the AccessU digital accessibility conference last month:

“I define disability as an opportunity for innovation.”

She charmed and impressed the audience, telling us about learning sign language by touch, learning to surf, and about the keyboard-to-braille communication system that she used to take questions after her talk.

Contrast this with the perspective many of us take building apps: web accessibility is treated as an afterthought, a confusing collection of rules that the team might look into for version two. If that sounds familiar (and you’re a developer, designer or product manager), this article is for you.

I hope to shift your perspective closer to Haben Girma’s by showing how web accessibility fits into the broader areas of technology, disability, and design. We’ll see how designing for different sets of abilities leads to insight and innovation. I’ll also shed some light on how the history of browsers and HTML is intertwined with the history of assistive technology.

Assistive Technology

An accessible product is one that is usable by all, and assistive technology is a general term for devices or techniques that can aid access, typically when a disability would otherwise preclude it. For example, captions give deaf and hard of hearing people access to video, but things get more interesting when we ask what counts as a disability.

On the ‘social model’ definition of disability adopted by the World Health Organization, a disability is not an intrinsic property of an individual, but a mismatch between the individual’s abilities and environment. Whether something counts as a ‘disability’ or an ‘assistive technology’, doesn’t have such a clear boundary and is contextual.

Addressing mismatches between ability and environment has lead to not only technological innovations but also to new understandings of how humans perceive and interact with the world.

Access + Ability, a recent exhibit at the Cooper Hewitt Smithsonian design museum in New York, showcased some recent assistive technology prototypes and products. I’d come to the museum to see a large exhibit on designing for the senses, and ended up finding that this smaller exhibit offered even more insight into the senses by its focus on cross-sensory interfaces.

Seeing is done with the brain, and not with the eyes. This is the idea behind one of the items in the exhibit, Brainport, a device for those who are blind or have low vision. Your representation of your physical environment from sight is based on interpretations your brain makes from the inputs that your eyes receive.

What if your brain received the information your eyes typically receive through another sense? A camera attached to Brainport’s headset receives visual inputs which are translated into a pixel-like grid pattern of gentle shocks perceived as “bubbles” on the wearer’s tongue. Users report being able to “see” their surroundings in their mind’s eye.

Soundshirt also translates inputs typically perceived by one sense to inputs that can be perceived by another. This wearable tech is a shirt with varied sound sensors and subtle vibrations corresponding to different instruments in an orchestra, enabling a tactile enjoyment of a symphony. Also on display for interpreting sound was an empathetically designed hearing aid that looks like a piece of jewelry instead of a clunky medical device.

Designing for different sets of abilities often leads to innovations that turn out to be useful for people and settings beyond their intended usage. Curb cuts, the now familiar mini ramps on the corners of sidewalks useful to anyone wheeling anything down the sidewalk, originated from disability rights activism in the ’70s to make sidewalks wheelchair accessible. Pellegrino Turri invented the early typewriter in the early 1800s to help his blind friend write legibly, and the first commercially available typewriter, the Hansen Writing Ball, was created by the principal of Copenhagen’s Royal Institute for the Deaf-Mutes.

Vint Cerf cites his hearing loss as shaping his interest in networked electronic mail and the TCP/IP protocol he co-invented. Smartphone color contrast settings for color blind people are useful for anyone trying to read a screen in bright sunlight, and have even found an unexpected use in helping people to be less addicted to their phones.

So, designing for different sets of abilities gives us new insights into how we perceive and interact with the environment, and leads to innovations that make for a blurry boundary between assistive technology and technology generally.

With that in mind, let’s turn to the web.

Assistive Tech And The Web

The web was intended as accessible to all from the start. A quote you’ll run into a lot if you start reading about web accessibility is:

“The power of the Web is in its universality. Access by everyone regardless of disability is an essential aspect.”

— Tim Berners-Lee, W3C Director and inventor of the World Wide Web

What sort of assistive technologies are available to perceive and interact with the web? You may have heard of or used a screen reader that reads out what’s on the screen. There are also braille displays for web pages, and alternative input devices like an eye tracker I got to try out at the Access + Ability exhibit.

It’s fascinating to learn that web pages are displayed in braille; the web pages we create may be represented in 3D! Braille displays are usually made of pins that are raised and lowered as they “translate” each small part of the page, much like the device I saw Haben Girma use to read audience questions after her AccessU keynote. A newer company, Blitab (named for “blind” + “tablet”), is creating a braille Android tablet that uses a liquid to alter the texture of its screen.

People proficient with using audio screen readers get used to faster speech and can adjust playback to an impressive rate (as well as saving battery life by turning off the screen). This makes the screen reader seem like an equally useful alternative mode of interacting with web sites, and indeed many people take advantage of audio web capabilities to dictate or hear content. An interface intended for some becomes more broadly used.

Web accessibility is about more than screen readers, however, we’ll focus on them here because — as we’ll see — screen readers are central to the technical challenges of an accessible web.

Recommended reading: Designing For Accessibility And Inclusion by Steven Lambert

Technical Challenges And Early Approaches

Imagine you had to design a screen reader. If you’re like me before I learned more about assistive tech, you might start by imagining an audiobook version of a web page, thinking your task is to automate reading the words on the page. But look at this page. Notice how much you use visual cues from layout and design to tell you what its parts are for how to interact with them.

- How would your screen reader know when the text on this page belongs to clickable links or buttons?

- How would the screen reader determine what order to read out the text on the page?

- How could it let the user “skim” this page to determine the titles of the main sections of this article?

The earliest screen readers were as simple as the audiobook I first imagined, as they dealt with only text-based interfaces. These “talking terminals,” developed in the mid-’80s, translated ASCII characters in the terminal’s display buffer to an audio output. But graphical user interfaces (or GUI’s) soon became common. “Making the GUI Talk,” a 1991 BYTE magazine article, gives a glimpse into the state of screen readers at a moment when the new prevalence of screens with essentially visual content made screen readers a technical challenge, while the freshly passed Americans with Disabilities Act highlighted their necessity.

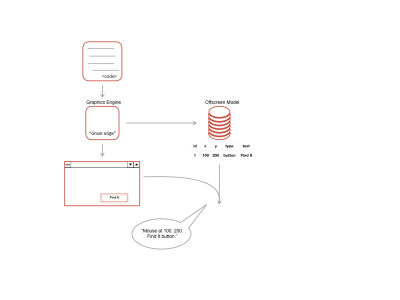

OutSpoken, discussed in the BYTE article, was one of the first commercially available screen readers for GUI’s. OutSpoken worked by intercepting operating system level graphics commands to build up an offscreen model, a database representation of what is in each part of the screen. It used heuristics to interpret graphics commands, for instance, to guess that a button is drawn or that an icon is associated with nearby text. As a user moves a mouse pointer around on the screen, the screen reader reads out information from the offscreen model about the part of the screen corresponding to the cursor’s location.

This early approach was difficult: intercepting low-level graphics commands is complex and operating system dependent, and relying on heuristics to interpret these commands is error-prone.

The Semantic Web And Accessibility APIs

A new approach to screen readers arose in the late ’90s, based on the idea of the semantic web. Berners-Lee wrote of his dream for a semantic web in his 1999 book Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web:

"I have a dream for the Web [in which computers] become capable of analyzing all the data on the Web — the content, links, and transactions between people and computers. A "Semantic Web", which makes this possible, has yet to emerge, but when it does, the day-to-day mechanisms of trade, bureaucracy, and our daily lives will be handled by machines talking to machines. The "intelligent agents" people have touted for ages will finally materialize."

Berners-Lee defined the semantic web as “a web of data that can be processed directly and indirectly by machines.” It’s debatable how much this dream has been realized, and many now think of it as unrealistic. However, we can see the way assistive technologies for the web work today as a part of this dream that did pan out.

Berners-Lee emphasized accessibility for the web from the start when founding the W3C, the web’s international standards group, in 1994. In a 1996 newsletter to the W3C’s Web Accessibility Initiative, he wrote:

"The emergence of the World Wide Web has made it possible for individuals with appropriate computer and telecommunications equipment to interact as never before. It presents new challenges and new hopes to people with disabilities."

HTML4, developed in the late ’90s and released in 1998, emphasized separating document structure and meaning from presentational or stylistic concerns. This was based on semantic web principles, and partly motivated by improving support for accessibility. The HTML5 that we currently use builds on these ideas, and so supporting assistive technology is central to its design.

So, how exactly do browsers and HTML support screen readers today?

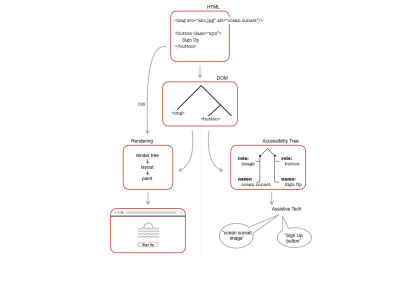

Many front-end developers are unaware that the browser parses the DOM to create a data structure, especially for assistive technologies. This is a tree structure known as the accessibility tree that forms the API for screen readers, meaning that we no longer rely on intercepting the rendering process as the offscreen model approach did. HTML yields one representation that the browser can use both to render on a screen, and also give to audio or braille devices.

Let’s look at the accessibility API in a little more detail to see how it handles the challenges we considered above. Nodes of the accessibility tree, called “accessible objects,” correspond to a subset of DOM nodes and have attributes including role (such as button), name (such as the text on the button), and state (such as focused) inferred from the HTML markup. Screen readers then use this representation of the page.

This is how a screen reader user can know an element is a button without making use of the visual style cues that a sighted user depends on. How could a screen reader user find relevant information on a page without having to read through all of it? In a recent survey, screen reader users reported that the most common way they locate the information they are looking for on a page is via the page’s headings. If an element is marked up with an h1–h6 tag, a node in the accessibility tree is created with the role heading. Screen readers have a “skip to next heading” functionality, thereby allowing a page to be skimmed.

Some HTML attributes are specifically for the accessibility tree. ARIA (Accessible Rich Internet Applications) attributes can be added to HTML tags to specify the corresponding node’s name or role. For instance, imagine our button above had an icon rather than text. Adding aria-label="sign up" to the button element would ensure that the button had a label for screen readers to represent to their users. Similarly, we can add alt attributes to image tags, thereby supplying a name to the corresponding accessible node and providing alternative text that lets screen reader users know what’s on the page.

The downside of the semantic approach is that it requires developers to use HTML tags and aria attributes in a way that matches their code’s intent. This, in turn, requires awareness among developers, and prioritization of accessibility by their teams. Lack of awareness and prioritization, rather than any technical limitation, is currently the main barrier to an accessible web.

So the current approach to assistive tech for the web is based on semantic web principles and baked into the design of browsers and HTML. Developers and their teams have to be aware of the accessibility features built into HTML to be able to take advantage of them.

Recommended reading: Accessibility APIs: A Key To Web Accessibility by Léonie Watson

AI Connections

Machine Learning (ML) and Artificial Intelligence (AI) come to mind when we read Berners-Lee’s remarks about the dream of the semantic web today. When we think of computers being intelligent agents analyzing data, we might think of this as being done via machine learning approaches. The early offscreen model approach we looked at used heuristics to classify visual information. This also feels reminiscent of machine learning approaches, except that in machine learning, heuristics to classify inputs are based on an automated analysis of previously seen inputs rather than hand-coded.

What if in the early days of figuring out how to make the web accessible we had been thinking of using machine learning? Could such technologies be useful now?

Machine learning has been used in some recent assistive technologies. Microsoft’s SeeingAI and Google’s Lookout use machine learning to classify and narrate objects seen through a smartphone camera. CTRL Labs is working on a technology that detects micro-muscle movements interpreted with machine learning techniques. In this way, it seemingly reads your mind about movement intentions and could have applications for helping with some motor impairments. AI can also be used for character recognition to read out text, and even translate sign language to text. Recent Android advances using machine learning let users augment and amplify sounds around them, and to automatically live transcribe speech.

AI can also be used to help improve the data that makes its way to the accessibility tree. Facebook introduced automatically generated alternative text to provide user images with screen reader descriptions. The results are imperfect, but point in an interesting direction. Taking this one step further, Google recently announced that Chrome will soon be able to supply automatically generated alternative text for images that the browser serves up.

What’s Next

Until (or unless) machine learning approaches become more mature, an accessible web depends on the API based on the accessibility tree. This is a robust solution, but taking advantage of the assistive tech built into browsers requires people building sites to be aware of them. Lack of awareness, rather than any technical difficulty, is currently the main challenge for web accessibility.

Key Takeaways

- Designing for different sets of abilities can give us new insights and lead to innovations that are broadly useful.

- The web was intended to be accessible from the start, and the history of the web is intertwined with the history of assistive tech for the web.

- Assistive tech for the web is baked into the current design of browsers and HTML.

- Designing assistive tech, particularly involving AI, is continuing to provide new insights and lead to innovations.

- The main current challenge for an accessible web is awareness among developers, designers, and product managers.

Resources

- Google’s web.dev accessibility guide is concise and has interactive Glitch demos.

- Accessibility Insights is a Chrome extension from Microsoft that highlights issues in the browser.

- To learn more about the accessibility tree, see Leonie Watson’s Smashing Magazine article “Accessibility API’s: A Key to Web Accessibility,” Marcy Sutton’s Egghead video “What is the Accessibility Tree?” and Rob Dodson’s “Why you can’t test a screen reader (yet)!”

- Read more about the social model of disability and how it can inform inclusive design in Kat Holmes’ book Mismatch: How Inclusion Shapes Design.

Further Reading

- Conducting Accessibility Research In An Inaccessible Ecosystem

- Mobile Accessibility Barriers For Assistive Technology Users

- Connecting With Users: Applying Principles Of Communication To UX Research

- Iconography In Design Systems: Easy Troubleshooting And Maintenance

SurveyJS: White-Label Survey Solution for Your JS App

SurveyJS: White-Label Survey Solution for Your JS App

Register for free to attend Axe-con

Register for free to attend Axe-con Celebrating 10 million developers

Celebrating 10 million developers